Controlling Quarter

Latest revision as of 03:47, 6 May 2023

Controlling Quarter (hereinafter, the Quarter) is a lecture introducing the learners to operations research primarily through key topics related to controlling. The Quarter is the second of four lectures of Operations Quadrivium, which is the third of seven modules of Septem Artes Administrativi (hereinafter, the Course). The Course is designed to introduce the learners to general concepts in business administration, management, and organizational behavior.

Outline

Monitoring Quarter is the predecessor lecture. In the enterprise research series, the previous lecture is Business Analysis Quarter.

Concepts

- Controlling. Management function that involves comparing actual work performance with planned performance, analyzing variances, evaluating possible alternatives, and suggesting appropriate corrective actions as needed.

- Feedback control. Control that takes place after a work activity is done.

- Concurrent control. Control that takes place while a work activity is in progress.

- Feedforward control. Control that takes place before a work activity is done.

- Control process. A three-step process of measuring actual performance, comparing actual performance against a standard, and taking managerial action to correct deviations or inadequate standards.

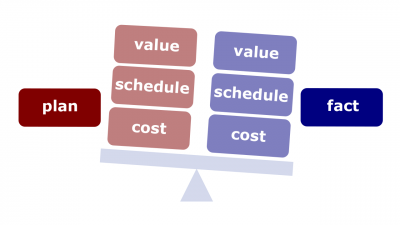
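The three steps of the control process can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed method: the names `control_step` and `ControlResult`, the numeric `tolerance` (standing in for an acceptable range of variation), and the sample figures are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class ControlResult:
    actual: float
    standard: float
    variance: float      # actual minus standard
    within_range: bool   # True when |variance| <= tolerance
    action: str


def control_step(actual: float, standard: float, tolerance: float) -> ControlResult:
    """Run one pass of the three-step control process (hypothetical helper)."""
    # Step 1: measure actual performance (supplied by the caller as `actual`).
    # Step 2: compare actual performance against the standard.
    variance = actual - standard
    within_range = abs(variance) <= tolerance
    # Step 3: take managerial action only when the deviation is significant.
    if within_range:
        action = "do nothing"
    else:
        action = "correct performance or revise the standard"
    return ControlResult(actual, standard, variance, within_range, action)


# Hypothetical figures: 92 units produced against a standard of 100, tolerance 5.
result = control_step(actual=92.0, standard=100.0, tolerance=5.0)
print(result.variance, result.within_range, result.action)
```

Keeping the tolerance as an explicit parameter makes the choice of what counts as a significant deviation visible, rather than hiding it inside the comparison.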

- Variance analysis. Analysis of discrepancies between planned and actual performance, to determine the magnitude of those discrepancies and recommend corrective and preventative action as required.

- Range of variation. The acceptable parameters of variance between actual performance and the standard.

- Quality control (QC). 1) Enterprise efforts undertaken in order to monitor specific outputs from specified processes to determine if they comply with requirement specifications, customer expectations, and relevant quality standards and, if they don't, identify ways to eliminate causes of unsatisfactory performance. 2) The organizational unit that is assigned responsibility for quality control.

- Quality attribute. The subset of nonfunctional requirements that describes properties of the software's operation, development, and deployment (e.g., performance, security, usability, portability, and testability).

- Quality. The degree to which a set of inherent characteristics fulfills requirements. In other words, quality is the ability of a market exchangeable to reliably do what it is supposed to do and to satisfy customer expectations.

- Grade. A category or rank used to distinguish items that have the same functional use (e.g., "hammer"), but do not share the same requirements for quality (e.g., different hammers may need to withstand different amounts of force).

- Six sigma. An approach that is designed to improve quality and lower the number of defects. This approach aims for six standard deviations between the mean and the nearest specification limit in any process.

- Heuristic review. Evaluating a market exchangeable or a process and documenting usability flaws and other areas for improvement.

- Customer journey map. A holistic, visual representation of your users' interactions with your organization when zoomed right out (usually captured on a large canvas).

- Experience map. A holistic, visual representation of your users' interactions with your organization when zoomed right out (usually captured on a large canvas).

- Content audit. Reviewing and cataloguing an existing repository of content.

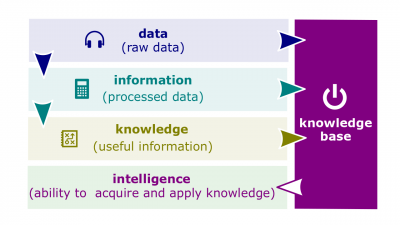

- Enterprise information. Enterprise data processed for the purpose of making decisions.

- Information. (1) Facts provided or learned about something or someone; (2) Processed data; (3) What is conveyed or represented by a particular arrangement or sequence of things.

- Data processing. A series of operations on data, especially by a computer, to retrieve, transform, or classify information.

- Controlled processing. A detailed consideration of evidence and information relying on facts, figures, and logic.

- Automatic processing. A relatively superficial consideration of evidence and information making use of heuristics.

- Data analysis. Activity for gaining insight from data.

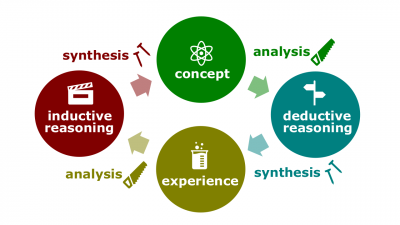

- Analysis. Detailed examination of the elements or structure of something, typically as a basis for discussion or interpretation.

- Analysis paralysis (or paralysis by analysis). The state of over-analyzing or over-thinking a situation so that a decision or action is never taken, in effect paralyzing the outcome.

- Data review. Review of capabilities and performance-related data to determine its adequacy.

- Data analytics. The discovery, interpretation, and communication of meaningful patterns in data. Data analytics is a broader term than data analysis, which is one of the analytics components. Analytics defines the science behind the analysis. Data analytics applied to enterprise administration is called business intelligence.

- Analytics. A broad term that encompasses a variety of tools, techniques and processes used for extracting useful information or meaningful patterns from data.

- Data reliability. The trustworthiness of data; this trustworthiness is a result of analysis of (a) content reliability, (b) source reliability, and (c) data intent.

- Content reliability. The trustworthiness of the content.

- Source reliability. The trustworthiness of the data source.

- Data intent. Intention or purpose with which data was created.

- Fact-based data. Data created with the intent to provide its users with facts.

- Opinion-based data. Data created with the intent to provide its users with opinions.

- Agenda-based data. Data created with the intent to provide its users with information desired to accommodate one's business goals or agendas.

- Data model. A model that depicts the logical structure of data, independent of the data design or data storage mechanisms. A data model is the result of a collaborative effort between business end-users and IT database analysts. The first step is to define in plain English what data the business needs in order for its various functions to communicate with each other, and how this data must be ordered and structured so it makes the most sense. The second step is for the data analysts and other IT staff to devise a technical database, a data storage and security plan, and a plan that enables application and analytic report development using this data. Together, these steps result in a data model for the business.

- Optionality. Defining whether or not a relationship between entities in a data model is mandatory. Optionality is shown on a data model with a special notation.

- Cardinality. The number of occurrences of one entity in a data model that are linked to a second entity. Cardinality is shown on a data model with a special notation, number (e.g., 1), or letter (e.g., M for many).

- Data flow diagram (DFD). An analysis model that illustrates processes that occur, along with the flows of data to and from those processes.

- State diagram. An analysis model showing the life cycle of a data entity or class.

- Sequence diagram. A type of diagram that shows objects participating in interactions and the messages exchanged between them.

- Class model. A type of data model that depicts information groups as classes.

- Snapshot. A view of data at a particular moment in time.

- Data dictionary. An analysis model describing the data structures and attributes needed by the system.

- Data entity. A group of related information to be stored by the system. Entities can be people, roles, places, things, organizations, occurrences in time, concepts, or documents.

- Attribute. A data element with a specified data type that describes information associated with a concept or entity.

- Glossary. A list and definition of the business terms and concepts relevant to the solution being built or enhanced.

- Jargon. Specialized terminology or technical language that members of a group use to communicate among themselves.

- Categorization.

- Structural rule. Structural rules determine when something is or is not true or when things fall into a certain category. They describe categorizations that may change over time.

- Knowledge area. A group of related tasks that support a key function of business analysis.

- Schema. The structure that defines the organization of data in a database.

- Data mart. A small data repository that is focused on information for a specific subject area of the company, such as Sales, Finance, or Marketing.

- Data warehouse. A data repository that deals with multiple subject areas (or data marts).

- Enterprise research. Detailed examination of the enterprise data in order to obtain enterprise information.

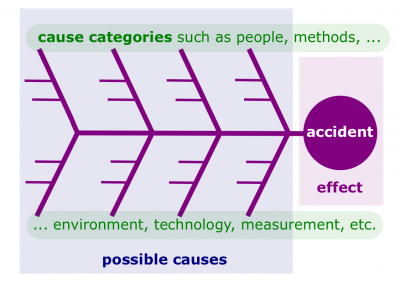

- Root-cause analysis. A structured examination of an identified problem to understand the underlying causes using a fishbone diagram.

- Return on investment (ROI). A financial measurement that assesses how profitable investments are. This is calculated by dividing the expected return (profit) by the financial outlay. In other words, ROI is the money an investor gets back as a percentage of the money he or she has invested in a venture. For example, if a venture capitalist invests $2 million for a 20 percent share in a company and that company is bought out for $40 million, the venture capitalist's return is $8 million.

- "Boiled frog" phenomenon. A perspective on recognizing performance declines that suggests watching out for subtly declining situations.

- Performance. (1) An act of staging or presenting; (2) The action or process of carrying out or accomplishing an action, task, or function; (3) The combination of effectiveness and efficiency at undertaking an enterprise effort.

- Organizational performance. A comprehensive review of the original specification, statement of work, scope and contract modifications, with a purpose to avoid pitfalls in future procurements.

- Contractor performance. A comprehensive review of contractor's technical and cost performance and work delivery schedules.

- Job analysis. An assessment that defines jobs and the behaviors necessary to perform them.

- Work-in-progress limit (WIP). Work in progress refers to work that is currently being developed and not yet ready to be released as a deliverable; a work-in-progress limit caps how much of that work may be open at one time. For Scrum teams, this would apply to the work being accomplished during a sprint. For Kanban teams, this refers to work that has been pulled from the backlog and is being developed, indicated by cards in the ‘Doing’ or ‘Work-in-Progress’ column of the Kanban task board.

- Productivity. (1) The amount of goods and services produced divided by the inputs needed to generate that output; (2) The combination of the effectiveness and efficiency.

- Organizational effectiveness. A measure of how appropriate organizational goals are and how well those goals are being met.

- Graphic rating scale. An evaluation method in which the evaluator rates performance factors on an incremental scale.

- Breakeven analysis. A technique for identifying the point at which total revenue is just sufficient to cover total costs.

- Burn rate. “The rate at which a new company uses up its venture capital to finance overhead before generating positive cash flow from operations. In other words, it’s a measure of negative cash flow.” (Source: Investopedia) When your burn rate increases or revenue falls, it is typically time to make decisions on expenses (e.g., reduce staff).
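The financial measures defined in this list (return on investment, breakeven analysis, and burn rate) reduce to short formulas. The sketch below is illustrative only: the function names are hypothetical, ROI is computed on net profit (proceeds minus outlay), which is one common reading of "return", and the breakeven formula assumes a single product with a constant price and constant variable cost per unit.

```python
def roi(net_profit: float, outlay: float) -> float:
    """Return on investment as a fraction of the outlay (assumes net profit)."""
    if outlay <= 0:
        raise ValueError("outlay must be positive")
    return net_profit / outlay


def breakeven_units(fixed_costs: float, unit_price: float, unit_variable_cost: float) -> float:
    """Units at which total revenue just covers total costs."""
    margin = unit_price - unit_variable_cost  # contribution per unit sold
    if margin <= 0:
        raise ValueError("unit price must exceed unit variable cost")
    return fixed_costs / margin


def burn_rate(cash_start: float, cash_end: float, months: int) -> float:
    """Average net cash consumed per month over the period."""
    if months <= 0:
        raise ValueError("months must be positive")
    return (cash_start - cash_end) / months


# The venture capitalist example: $2 million invested, $8 million returned.
print(roi(net_profit=8_000_000 - 2_000_000, outlay=2_000_000))  # 3.0, i.e. 300%

# Hypothetical figures for the other two measures.
print(breakeven_units(fixed_costs=50_000, unit_price=25, unit_variable_cost=15))
print(burn_rate(cash_start=900_000, cash_end=600_000, months=6))
```

If "return" is instead read as gross proceeds, the same example yields 4.0; the distinction should be stated whenever an ROI figure is reported.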

Roles

- Management analyst. A professional who conducts organizational studies and evaluations, designs systems and procedures, conducts work simplification and measurement studies, and prepares operations and procedures manuals to assist management in operating more efficiently and effectively. Sometimes, this role is called management consultant.

Methods

- Peer review. A validation technique in which a small group of stakeholders evaluates a portion of a work product to find errors to improve its quality.

- Walkthrough. A type of peer review in which participants present, discuss, and step through a work product to find errors. Walkthroughs of requirements documentation are used to verify the correctness of requirements. See also structured walkthrough.

- Structured walkthrough. A structured walkthrough is an organized peer review of a deliverable with the objective of finding errors and omissions. It is considered a form of quality assurance.

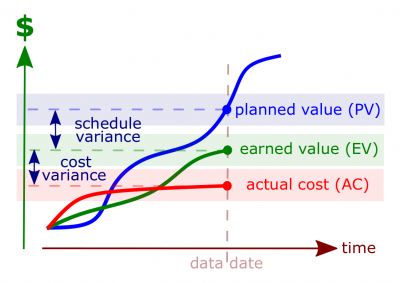

- Earned value management (EVM). A method for integrating scope, schedule, and resources, and for measuring project performance. It compares the amount of work that was planned with what was actually earned and with what was actually spent to determine if cost and schedule performance are as planned.

- Earned value (EV). The physical work accomplished plus the authorized budget for this work. The sum of the approved cost estimates (may include overhead allocation) for activities (or portions of activities) completed during a given period (usually project-to-date). In other words, this value is the budgeted cost of work performed for an activity or group of activities.

- Actual cost (AC). The actual amount of money either paid for material or charged as labor, material, or overhead to a work order. In other words, actual costs are total costs incurred that must relate to whatever cost was budgeted within the planned value and earned value (which can sometimes be direct labor hours alone, direct costs alone, or all costs including indirect costs) in accomplishing work during a given time period.

- Planned value (PV). The physical work scheduled, plus the authorized budget to accomplish the scheduled work. Previously, this was called the budgeted costs for work scheduled (BCWS).

- Gap analysis. A comparison of the current state and desired future state of an organization in order to identify differences that need to be addressed.

- Gap analysis. A study of whether the data that a company has can meet the business expectations that the company has set for its reporting and BI, and where possible data gaps or missing data might exist.

- Parametric estimating. An estimating technique that uses a statistical relationship between historical data and other variables (e.g., square footage in construction, lines of code in software development) to calculate an estimate.

- Opportunity cost. The loss of potential gain from other alternatives when one choice is made.

- Data-analysis technique. An established procedure for data analysis.

- Investigation. The formal or systematic examination of data sources that uses one or more data-gathering techniques and is conducted in order to gather data and/or assess data reliability.

- Slice and dice. Data manipulation tools in reporting or spreadsheet software that allow users to view data from any angle.

- Five Whys. An iterative interrogative technique that consists of a series of why-questions and is used to explore the cause-and-effect relationships underlying a particular problem.
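Parametric estimating, as defined in this list, rests on a statistical relationship fitted to historical data. One simple way to obtain such a relationship is an ordinary least-squares line; the sketch below assumes a single driver variable (floor area), and both the helper name `fit_line` and the historical figures are invented for illustration.

```python
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical historical projects: floor area (square feet) and final cost.
areas = [1000.0, 1500.0, 2000.0, 2500.0]
costs = [120_000.0, 170_000.0, 220_000.0, 270_000.0]

slope, intercept = fit_line(areas, costs)
estimate = slope * 1800.0 + intercept  # estimate for a new 1,800 sq ft project
print(slope, intercept, estimate)
```

Real historical data rarely falls on a straight line, so an estimate of this kind is usually reported together with some measure of its uncertainty.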

Instruments

- Effort performance indicator.

| Indicator | Condition | Status |
|---|---|---|
| Schedule | EV < PV | Behind schedule |
| Schedule | EV = PV | On schedule |
| Schedule | EV > PV | Ahead of schedule |
| Budget | EV < AC | Above budget |
| Budget | EV = AC | On budget |
| Budget | EV > AC | Below budget |

- Schedule variance (SV). 1) Any difference between the scheduled completion of an activity and the actual completion of that activity. 2) In earned value, EV less BCWS = SV.

- Schedule performance index (SPI). The schedule efficiency ratio of earned value accomplished against the planned value. The SPI describes what portion of the planned schedule was actually accomplished. The SPI = EV divided by PV.

- Cost variance (CV). 1) Any difference between the budgeted cost of an activity and the actual cost of that activity. 2) In earned value, EV less ACWP = CV.

- Cost performance index (CPI). The cost efficiency ratio of earned value to actual costs. CPI is often used to predict the magnitude of a possible cost overrun using the following formula: BAC/CPI = projected cost at completion. CPI = EV divided by AC.

- Quality-control tool. A tangible and/or software implement used to control quality.

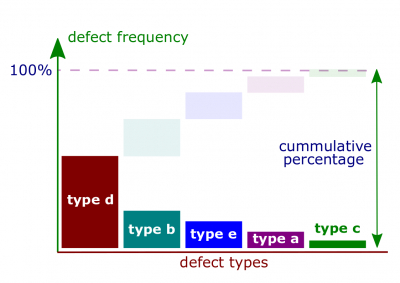
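The earned value indicators defined in this section (SV, SPI, CV, and CPI, plus the projected cost at completion, BAC/CPI) can be computed directly from EV, PV, and AC. The following is a minimal sketch; the function names and the project figures are hypothetical.

```python
def evm_indicators(ev: float, pv: float, ac: float, bac: float) -> dict[str, float]:
    """Compute the earned value indicators from EV, PV, AC, and BAC."""
    if pv <= 0 or ac <= 0 or ev <= 0:
        raise ValueError("EV, PV, and AC must be positive")
    cpi = ev / ac
    return {
        "SV": ev - pv,        # schedule variance
        "SPI": ev / pv,       # schedule performance index
        "CV": ev - ac,        # cost variance
        "CPI": cpi,           # cost performance index
        "EAC": bac / cpi,     # projected cost at completion, BAC / CPI
    }


def status(ev: float, pv: float, ac: float) -> tuple[str, str]:
    """Translate the EV comparisons into schedule and budget status."""
    if ev < pv:
        schedule = "behind schedule"
    elif ev == pv:
        schedule = "on schedule"
    else:
        schedule = "ahead of schedule"
    if ev < ac:
        budget = "above budget"
    elif ev == ac:
        budget = "on budget"
    else:
        budget = "below budget"
    return schedule, budget


# Hypothetical project: $40,000 earned, $50,000 planned, $45,000 spent.
print(evm_indicators(ev=40_000, pv=50_000, ac=45_000, bac=100_000))
print(status(ev=40_000, pv=50_000, ac=45_000))
```

In this example both variances are negative and both indices are below 1, so the project is behind schedule and above budget.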

- Pareto diagram. A quality-control tool that represents a histogram, ordered by frequency of occurrence, that shows how many results were generated by each identified cause.

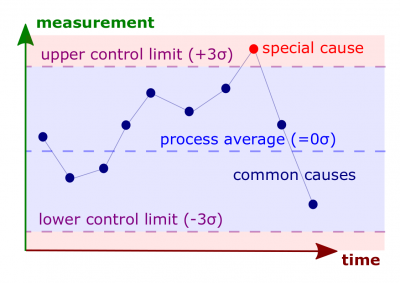
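The data behind a Pareto diagram is a frequency count per cause, ordered from most to least frequent, usually accompanied by a running cumulative percentage. A minimal sketch of that tabulation follows; the function name `pareto_table` and the defect log are assumptions made for the example, and drawing the actual chart is left out.

```python
from collections import Counter


def pareto_table(causes: list[str]) -> list[tuple[str, int, float]]:
    """Return (cause, count, cumulative percent) rows, most frequent first."""
    counts = Counter(causes)
    total = sum(counts.values())
    rows = []
    cumulative = 0
    # most_common() yields causes in descending order of frequency.
    for cause, count in counts.most_common():
        cumulative += count
        rows.append((cause, count, 100.0 * cumulative / total))
    return rows


# Hypothetical defect log: one entry per observed defect.
defects = ["scratch"] * 5 + ["dent"] * 3 + ["misprint"] * 2

for cause, count, cumulative_pct in pareto_table(defects):
    print(f"{cause:10s} {count:3d} {cumulative_pct:6.1f}%")
```

The cumulative column is what lets a reviewer see how few causes account for most of the results.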

- Control chart. A quality-control tool that represents a graphic display of the results, over time and against established control limits, of a process. They are used to determine if the process is in control or in need of adjustment.

- Fishbone diagram. A quality-control tool that represents a diagram and is used in root-cause analysis to identify underlying causes of an observed problem, and the relationships that exist between those causes.

- Checklist. A quality-control tool that includes a standard set of quality elements and/or possible risks that reviewers use for requirements verification and requirements validation, or that is specifically developed to capture issues of concern to the project.

- Data-analysis tool. A tangible and/or software implement used to analyze data.

- Database. A collection of data arranged for convenient and quick search and retrieval by applications and analytics software.

- Management information system (MIS). A system used to provide management with needed information on a regular basis.

- Digital tool. Technology, systems, or software that allow the user to collect, visualize, understand, or analyze data.

- Performance management system. A system that establishes performance standards used to evaluate employee performance.

- Balanced scorecard. A performance-management tool that holistically captures an organization's performance from several vantage points (e.g., sales results vs. inventory levels) on a single page. The scorecard indicates more than just the financial results.
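The control chart defined in this list compares process results against established control limits. A simplified sketch of that check is shown below. It assumes limits set at three sample standard deviations around the mean of a baseline run, which is a common convention rather than something this lecture specifies, and the helper names and measurements are hypothetical.

```python
from statistics import mean, stdev


def control_limits(baseline: list[float], sigmas: float = 3.0) -> tuple[float, float, float]:
    """Return (lower limit, center line, upper limit) from baseline data."""
    center = mean(baseline)
    spread = stdev(baseline)  # sample standard deviation
    return center - sigmas * spread, center, center + sigmas * spread


def out_of_control(points: list[float], lower: float, upper: float) -> list[tuple[int, float]]:
    """Return (index, value) for every point outside the control limits."""
    return [(i, x) for i, x in enumerate(points) if x < lower or x > upper]


# Hypothetical baseline measurements taken while the process was stable.
baseline = [10.0, 10.2, 9.8, 10.1, 9.9, 10.0, 10.3, 9.7]
lower, center, upper = control_limits(baseline)

# New measurements checked against the established limits.
flagged = out_of_control([10.1, 9.9, 11.5, 10.0], lower, upper)
print(lower, center, upper, flagged)
```

A point outside the limits suggests that the process needs adjustment; points inside the limits are treated as ordinary variation.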

Practices

Effort Engineering Quarter is the successor lecture. In the enterprise research series, the next lecture is Human Motivations Quarter.